Bias against Novelty in Science: A Cautionary Tale

Evaluating scientific impact using short citation windows and focusing only on the most prominent journals may fail to recognize the value of novel research.

Research based on an unusual or novel approach may lead to important breakthroughs in science, but peer evaluators are often overly cautious in evaluating such work, Jian Wang, Reinhilde Veugelers, and Paula Stephan find in "Bias against Novelty in Science: A Cautionary Tale for Users of Bibliometric Indicators" (NBER Working Paper 22180). They argue that this excess caution is a flaw in the traditional means of evaluating research, with far-reaching implications for the allocation of resources.

The researchers define novel research as being based on "the recombination of pre-existing knowledge components in an unprecedented fashion." An outstanding example of such work occurred in the 1950s when Leo Szilard applied his expertise as a nuclear physicist to the field of biology and the cloning of cells. The study finds that novel papers, which represent 11 percent of all papers, often receive few citations for a significant period after publication. Because citation frequency is a standard yardstick for evaluating scientific papers, this finding suggests that evaluation of research based on short time windows is biased against novel research.

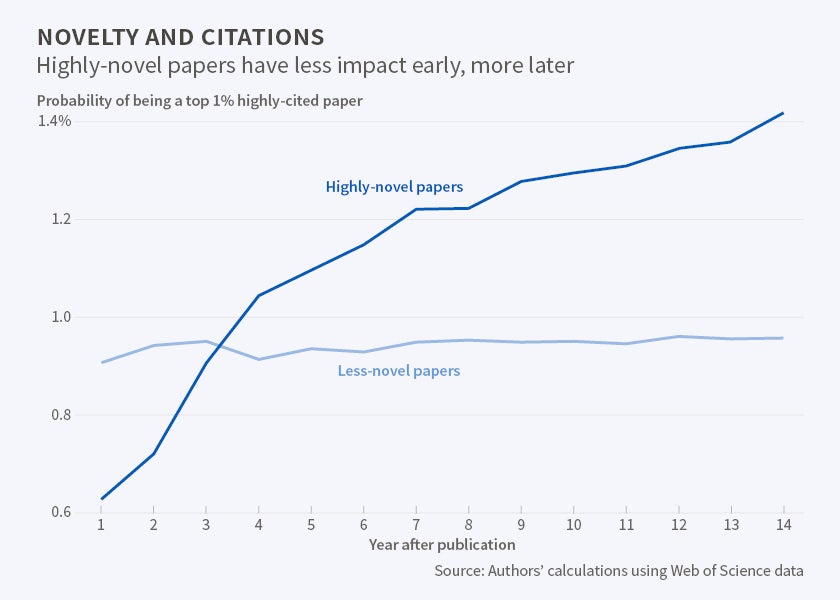

The study analyzes citation patterns for the Web of Science papers that were indexed in 2001, some 773,311 research articles across all disciplines. It identifies papers that combine existing pieces of knowledge in new ways, then tracks how soon, how often, and in which fields such research is cited by other publications. The researchers find that novel studies are less likely to be published in high-impact-factor journals, but eventually have a much higher chance of being in the top one percent of highly cited research, and that they are more likely to lead to important follow-up research. But it takes time for this impact to develop.
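The identification step described above can be illustrated with a minimal sketch. This summary does not spell out how the study defines a "knowledge piece," so the code assumes each paper simply carries a set of hypothetical component labels, and it treats a paper as novel if it contains at least one pair of components that no earlier paper combined. The function names, the tuple layout, and the toy records are all invented for illustration and are not the researchers' actual procedure or data.

```python
from itertools import combinations


def pairs_of(components):
    """Return every unordered pair of distinct components as a frozenset."""
    return {frozenset(pair) for pair in combinations(sorted(set(components)), 2)}


def flag_novel_papers(papers, target_year):
    """Return ids of target-year papers that contain a previously unseen pair.

    `papers` is a list of (paper_id, year, components) tuples. This layout is
    an assumption made for the sketch, not the study's data format.
    """
    # Collect every pair that appeared in papers before the target year.
    prior_pairs = set()
    for _, year, components in papers:
        if year < target_year:
            prior_pairs |= pairs_of(components)

    # A target-year paper is flagged if any of its pairs is absent from that set.
    return [
        paper_id
        for paper_id, year, components in papers
        if year == target_year and pairs_of(components) - prior_pairs
    ]


# Toy records, invented for illustration only.
toy_papers = [
    ("a", 1999, ["physics", "chemistry"]),
    ("b", 2000, ["biology", "chemistry"]),
    ("c", 2001, ["physics", "chemistry"]),  # repeats an earlier combination
    ("d", 2001, ["physics", "biology"]),    # combination not seen before 2001
]
print(flag_novel_papers(toy_papers, 2001))  # ['d']
```

A real analysis would need a much longer baseline period than the two prior years used here, since a short baseline makes almost every combination look new.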

The researchers argue that classic bibliometric evaluations, which focus on citation counts over a relatively short post-publication period and on publication in the most prominent journals, can lead to bias against novel research. They suggest that citation-based bias can cause funding agencies to favor "safer" projects over novel ones. Relying on citation counts over short time windows and on the impact factor of the publishing journal makes it more difficult to marshal support for novel research projects, even though this research is often among the most fruitful of scientific inquiries.

When bibliometric indicators are used as part of evaluation processes, the researchers recommend drawing on a wide portfolio of indicators and using time windows longer than the standard two or three years.
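The effect of the window length can be seen in a small sketch. The function below counts the citations a paper receives from its publication year through a chosen number of later years. That inclusive convention is an assumption of the sketch, and the two citation histories are invented toy data, not figures from the study.

```python
def citations_in_window(pub_year, citing_years, window_years):
    """Count citations dated from pub_year through pub_year + window_years.

    `citing_years` holds one entry per citation received. Both endpoints are
    included, which is an assumption made for this sketch.
    """
    last_year = pub_year + window_years
    return sum(1 for year in citing_years if pub_year <= year <= last_year)


# Invented citation histories for two hypothetical papers published in 2001.
conventional = [2001, 2002, 2002, 2003, 2003, 2004, 2006]
slow_burn = [2003, 2006, 2007, 2008, 2008, 2009, 2010, 2011, 2012, 2013]

for window in (3, 13):
    print(
        window,
        citations_in_window(2001, conventional, window),
        citations_in_window(2001, slow_burn, window),
    )
# 3 6 1
# 13 7 10
```

In this toy case the three-year window ranks the conventional paper well ahead, while the longer window reverses the ranking, which is the pattern the researchers warn that short windows can hide.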

They also find that the impact of novel research comes largely from disciplines beyond its own field. Therefore, restricting peer review to scientists solely within the discipline, they conclude, "may fail to recognize the full value of novel research."

—Matt Nesvisky